System design: Load Balancing

How to distribute traffic and keep your system humming

Load balancing is a critical technique for scaling software to accommodate thousands or millions of users. It helps to prevent server overload by distributing incoming network traffic across multiple servers. This can improve performance and response time, making software more reliable and user-friendly.

This article will discuss the different types of load balancing, how load balancing works, and why load balancing is essential. Let's dive right in!

Prerequisite

A basic understanding of computer networking will help you follow along.

What is a load balancer?

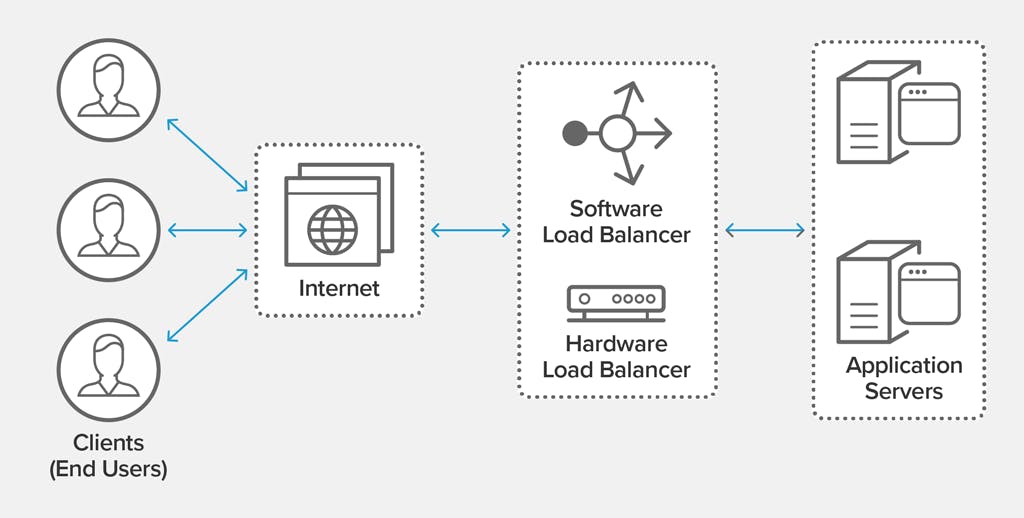

A load balancer is a hardware device or software application that distributes incoming network traffic across a cluster of servers, improving availability, performance, scalability, and security. If a server fails, the load balancer redirects the network traffic meant for the failed server to a backup server.

Clients send HTTP requests over the internet to the load balancer's public IP address. The load balancer then routes each request, according to the load balancing algorithm it implements, to a server in the cluster via a private IP address. Private IP addresses are used for internal communication within the cluster and are not accessible from the public internet.

A load balancer typically operates at the Transport Layer (Layer 4) and sometimes the Application Layer (Layer 7) of the OSI (Open Systems Interconnection) model. The layer at which a load balancer functions depends on its type and capabilities.

Why is load balancing important?

A load balancer is an essential part of any system that serves traffic at scale, as it ensures that network traffic is distributed evenly across the available servers. This helps prevent any single server from being overburdened, which would otherwise lead to a bad user experience. Load balancing can enhance security, availability, scalability, and overall performance.

Security - The load balancer stands as an intermediary between the public internet and the servers, thereby restricting direct access to the servers.

Scalability - When load balancing is implemented, it is easier to scale infrastructure. To scale, you simply add more servers to the existing cluster. This is called horizontal scaling or "scale-out."

Performance - The load balancer distributes incoming network traffic across the different servers available, thereby preventing one server from being overloaded. This improves performance and reduces response time.

Availability - A load balancer can also increase availability by providing redundancy. If one server fails, the load balancer can automatically redirect traffic to another server, so the system remains available despite server failures. This is essential for preventing a single point of failure among the servers.
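To make the failover idea concrete, here is a minimal sketch in Python. The `HealthAwarePool` class, its method names, and the server names are all hypothetical, and I am assuming that some separate health check calls `mark_down` when a server stops responding. Real load balancers detect failures in their own ways, so treat this as an illustration rather than how any particular product works.

```python
class HealthAwarePool:
    """Hypothetical server pool that skips servers marked as unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._position = 0

    def mark_down(self, server):
        # Assumed to be called by a health check when a server stops responding.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Assumed to be called when a known server recovers.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Walk the list at most once, skipping any server that is down.
        for _ in range(len(self.servers)):
            server = self.servers[self._position]
            self._position = (self._position + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


pool = HealthAwarePool(["server-1", "server-2", "server-3"])
pool.mark_down("server-2")  # simulate a failure
routed = [pool.next_server() for _ in range(4)]
print(routed)
```

With `server-2` marked as down, every request lands on one of the two remaining servers, so clients never see the failure.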

Types of load balancers

There are three main categories of load balancers:

Hardware load balancers - Hardware load balancers are physical appliances that sit between the client and the server, providing a layer of abstraction. They are typically used in high-traffic environments to distribute traffic across multiple servers, improving performance and scalability. Hardware load balancers are typically more expensive, but they can provide better performance.

Software load balancers - Software load balancers are software applications that can be installed on your machine. They use scheduling algorithms to distribute network load across multiple servers. An example of a software load balancer is Nginx, pronounced "engine-x." Software load balancers are typically less expensive, but they may not provide the same level of performance as hardware load balancers.

Virtual load balancers - Virtual load balancers (VLBs) are software-based load balancers that run on virtual machines, typically in the cloud. They are cost-effective and easy to deploy, but their scalability can be limited.

Load balancing algorithms

As you read this article, you might be wondering how a load balancer knows which server to direct network traffic to. Well, a load balancer uses a scheduling algorithm, or a combination of scheduling algorithms, to determine which server to send network traffic to. There are many different load-balancing algorithms, but I will discuss a few of the most common ones.

Round-Robin

In the round-robin algorithm, the load balancer distributes incoming requests to the servers sequentially. As you can see in the diagram below, with two servers the first request goes to server 1, the second request to server 2, the third request back to server 1, and so on. The load balancer keeps cycling through the servers in this order for as long as requests keep arriving.

Round-robin does not take each server's current load into account. When there is a high volume of requests, this can be inefficient: a request may be sent to a server that is still busy processing earlier requests and has to wait there, even if another server is idle.
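Here is a minimal sketch of round-robin in Python, assuming a fixed list of servers. The `RoundRobinBalancer` class and the server names are made up for illustration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer that hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = cycle(servers)

    def next_server(self):
        # Each call returns the next server in the loop, regardless of its load.
        return next(self._servers)


balancer = RoundRobinBalancer(["server-1", "server-2"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)
# ['server-1', 'server-2', 'server-1', 'server-2', 'server-1']
```

Notice that `next_server` never looks at how busy a server is. That is what makes round-robin simple, and also why it can send work to an already busy server.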

Least Connection

In the least connection algorithm, the load balancer routes incoming network traffic to the server in the cluster with the fewest active connections, hence the name "least connection algorithm."
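A rough sketch of this in Python could look like the following. The `LeastConnectionBalancer` class and its `acquire`/`release` methods are hypothetical names. I am assuming the balancer is told when a connection opens and when it closes, and that ties go to the server listed first.

```python
class LeastConnectionBalancer:
    """Hypothetical balancer that tracks active connections per server."""

    def __init__(self, servers):
        # Every server starts with zero active connections.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Pick the server with the fewest active connections.
        # On a tie, min() returns the server that was listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionBalancer(["server-1", "server-2", "server-3"])
first = lc.acquire()    # server-1 (all servers tied at 0)
second = lc.acquire()   # server-2 (server-1 now has 1 connection)
lc.release(first)       # the connection to server-1 closes
third = lc.acquire()    # server-1 again (back to 0 connections)
print(first, second, third)
```

Unlike round-robin, the choice here depends on what the servers are currently doing, so a server stuck with long-lived connections stops receiving new ones until it frees up.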

Least Response Time

In the least response time algorithm, the load balancer routes incoming network traffic to the server with the lowest response time. This ensures that the user experience is as good as possible, as requests are routed to the server that is most likely to respond quickly.

The least response time algorithm is often used in conjunction with other load balancing algorithms, such as round-robin or weighted least connection. This helps to ensure that traffic is distributed evenly across all servers, even if one server has a higher response time than the others.

The least response time algorithm is not always the best choice for every application. If the application is not very sensitive to response time, then other load balancing algorithms may be more appropriate.
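The sketch below shows one way to pick a server by response time in Python. How the "response time" of a server is measured is not specified above, so I am assuming an exponentially weighted moving average of observed times, which is only one possible choice. The `LeastResponseTimeBalancer` class, the `alpha` parameter, and the millisecond figures are all hypothetical.

```python
class LeastResponseTimeBalancer:
    """Hypothetical balancer that prefers the server with the lowest average response time."""

    def __init__(self, servers, alpha=0.5):
        # alpha controls how strongly the newest measurement counts (assumed value).
        self.alpha = alpha
        # None means the server has not been measured yet.
        self.avg_ms = {server: None for server in servers}

    def record(self, server, elapsed_ms):
        # Blend the new measurement into the running average.
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = float(elapsed_ms)
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * previous

    def pick(self):
        # Try unmeasured servers first so that every server gets sampled.
        for server, avg in self.avg_ms.items():
            if avg is None:
                return server
        return min(self.avg_ms, key=self.avg_ms.get)


lrt = LeastResponseTimeBalancer(["server-1", "server-2"])
lrt.record("server-1", 120)
lrt.record("server-2", 40)
fastest = lrt.pick()            # server-2 (40 ms beats 120 ms)
lrt.record("server-2", 400)     # server-2 slows down: average becomes 220 ms
after_slowdown = lrt.pick()     # server-1 (120 ms beats 220 ms)
print(fastest, after_slowdown)
```

Because the average moves with every new measurement, traffic shifts away from a server as soon as it starts responding slowly.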

Weight-Based

In weight-based load balancing algorithms, servers are assigned a weight based on their hardware capabilities. The load balancer then distributes traffic to the servers in proportion to their weights. This ensures that servers with more capacity handle a larger share of the load. The weight of a server is determined by a network administrator.

Below are the types of weighted load balancing algorithms:

Weighted response time

Weighted bandwidth

Weighted least connection

Weighted round-robin
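As an illustration of the last variant, here is a deliberately naive sketch of weighted round-robin in Python. The `weighted_round_robin` function, the server names, and the weights are hypothetical. I am assuming integer weights and simply repeating each server in the rotation as many times as its weight, which sends requests to the same server in bursts. Production load balancers may spread the picks out more evenly.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Return an endless iterator in which each server appears `weight` times per cycle."""
    schedule = [server for server, weight in weights.items() for _ in range(weight)]
    if not schedule:
        raise ValueError("at least one server needs a positive weight")
    return cycle(schedule)


# Assumed weights: big-server has three times the capacity of small-server.
picker = weighted_round_robin({"big-server": 3, "small-server": 1})
first_eight = [next(picker) for _ in range(8)]
print(first_eight)
```

Out of every four requests, three go to `big-server` and one goes to `small-server`, which matches the 3:1 weights.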

Conclusion

Load balancing is an important technique for improving the performance, scalability, and availability of software applications. There are several different load balancing algorithms available, each with its advantages and disadvantages. The best type of load balancing algorithm for a particular application will depend on the specific needs of the application.

For further reading, check out:

Types of load balancing algorithms by Cloudflare

Load balancing by TechTarget

Credits

- The memes and illustrations were obtained from Google.